Resilient PCI to Non-PCI Connectivity on GCP with Private Service Connect Interfaces

Magic of Virtual PSC Interfaces

Designing PCI-compliant infrastructure often brings one major challenge: enabling secure communication between PCI and non-PCI workloads without breaking strict segmentation rules. A recent conversation with a colleague sparked some interesting requirements around this exact problem.

On the networking side, one of the main requirements is pretty clear: PCI and non-PCI workloads shouldn’t communicate freely without inspection and policy controls in place. The two environments need to stay segmented, with all traffic flowing only through designated firewall enforcement points. In many designs, connections are initiated from the PCI side, with non-PCI workloads only responding rather than initiating connections. In our conversation, though, this wasn’t just a general best practice; it was a specific requirement we needed to design around.

Typically, architects place Network Virtual Appliances (NVAs) (such as those from Palo Alto Networks, Fortinet, or Check Point) between internal passthrough Network Load Balancers (ILBs) across the involved VPCs. They would then use static routes to control and direct traffic flow between the two sides. The twist in my case was that static routes weren’t an option and the design needed something more resilient to handle potential NVA failures. The goal was to keep traffic flowing smoothly without waking someone up for an incident in the middle of the night.

As I started thinking through the problem, something I’d come across recently came to mind: Private Service Connect interfaces. This feature turned out to be exactly what I needed. According to the official documentation, a Private Service Connect interface lets a producer VPC network initiate connections to various destinations in a consumer VPC network. These networks can even live in different projects or organizations. That matters here because our PCI and non-PCI zones are in different GCP projects, and a standard multi-NIC VM requires all of its NICs to be in VPCs belonging to the same GCP project. In our case, the producer VPC network is the PCI VPC network and the consumer VPC network is the non-PCI side.

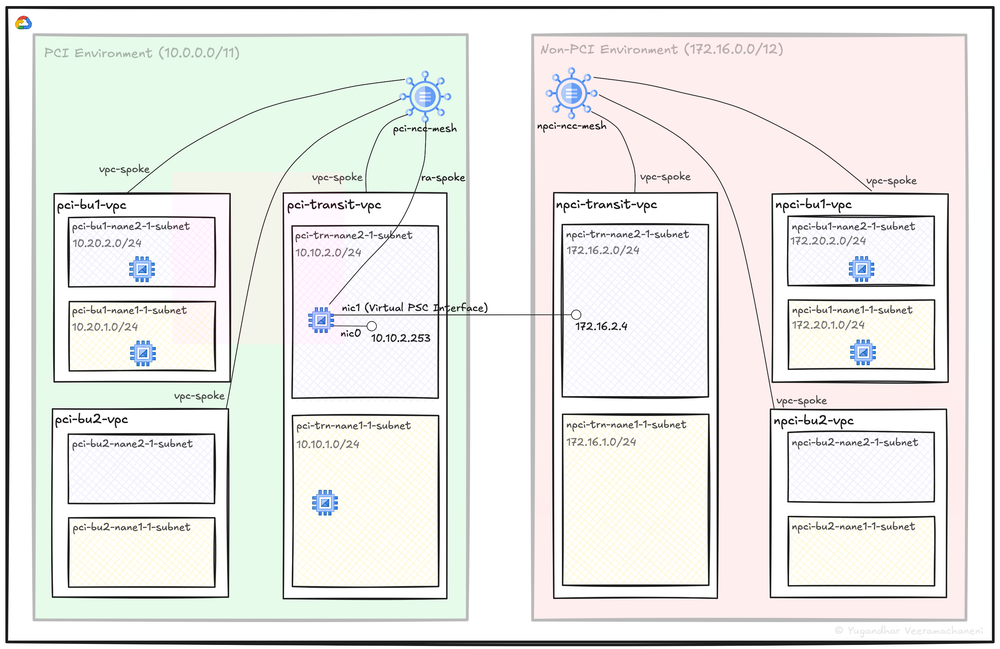

I was eager to see how this would work in practice, so I set up a quick test in my lab environment. The setup looks something like this:

Image: Lab set-up of PCI and non-PCI networks, a multi-NIC VM and Network Connectivity Center

In the setup, there are two separate environments: PCI and non-PCI. I’ve kept them in the same GCP project for simplicity, but they could just as easily live in different projects—or even different organizations. The layout includes a transit VPC and individual VPCs for Business Unit 1 and Business Unit 2 in each environment. The transit and business unit VPCs all connect as spokes to a Network Connectivity Center (NCC) Hub, where I’ve used a full mesh topology to keep things straightforward.

The key piece that brings this design together is the multi-NIC VM deployed in the pci-transit-vpc. It’s a special kind of setup where the primary interface (nic0) stays within the PCI zone, while the second interface (nic1) acts as a virtual Private Service Connect interface tied to the non-PCI zone through a subnet in the same region of the npci-transit-vpc. The beauty of this approach is that the VM can seamlessly reach both PCI and non-PCI VPCs. The important caveat, though, is that traffic remains one-way: the multi-NIC VM can initiate connections to resources in the non-PCI zone, but not the other way around, unless I alter iptables rules and add a static route in every single non-PCI zone VPC pointing the PCI zone address space to the next hop of this PSC interface. For this requirement, that limitation turned out to be a positive outcome.
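To make the two-interface layout concrete, here is a minimal sketch that only builds (and prints) the gcloud commands one might use to create such a VM: a network attachment on the non-PCI side, then a VM whose nic0 sits in a PCI subnet and whose nic1 is a PSC interface bound to that attachment. The project IDs, subnet names, attachment name, and VM name are all placeholders of mine, not values from the lab, and the accept-list setting is just one possible choice.

```python
import shlex


def psc_interface_commands(producer_project, consumer_project, region, zone,
                           pci_subnet, npci_subnet,
                           attachment="npci-attachment", vm="pci-router-vm"):
    """Build (but do not run) gcloud commands for a VM with a PSC interface.

    All names passed in are hypothetical placeholders.
    """
    attachment_path = (
        f"projects/{consumer_project}/regions/{region}"
        f"/networkAttachments/{attachment}"
    )
    # Consumer (non-PCI) side: the network attachment exposes a subnet
    # that producer VMs can plug a PSC interface into.
    create_attachment = [
        "gcloud", "compute", "network-attachments", "create", attachment,
        f"--project={consumer_project}",
        f"--region={region}",
        "--connection-preference=ACCEPT_MANUAL",
        f"--producer-accept-list={producer_project}",
        f"--subnets={npci_subnet}",
    ]
    # Producer (PCI) side: nic0 in the PCI subnet, nic1 via the attachment.
    # IP forwarding is enabled because the VM acts as a router.
    create_vm = [
        "gcloud", "compute", "instances", "create", vm,
        f"--project={producer_project}",
        f"--zone={zone}",
        "--can-ip-forward",
        f"--network-interface=subnet={pci_subnet},no-address",
        f"--network-interface=network-attachment={attachment_path}",
    ]
    return [create_attachment, create_vm]


commands = psc_interface_commands(
    "pci-project", "npci-project", "us-east1", "us-east1-b",
    "pci-transit-subnet", "npci-transit-subnet",
)
for command in commands:
    print(shlex.join(command))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere; check the flags against the current gcloud reference before using them.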

The VM itself is a simple Debian instance acting as a router. It accepts packets on nic0, forwards them over nic1 toward the non-PCI ranges, and performs source NAT using nic1’s interface address. Straightforward enough. The tricky part is ensuring that other VMs in the PCI zone know to route non-PCI-bound traffic to this multi-NIC VM’s nic0 interface. There are two ways to handle that:

- Add static routes in every PCI VPC spoke, pointing the non-PCI ranges to a passthrough Internal Network Load Balancer in front of our multi-NIC VM’s nic0 as the next hop.

- Skip static routes entirely and register the multi-NIC VM as a Router Appliance spoke in the PCI NCC Hub.

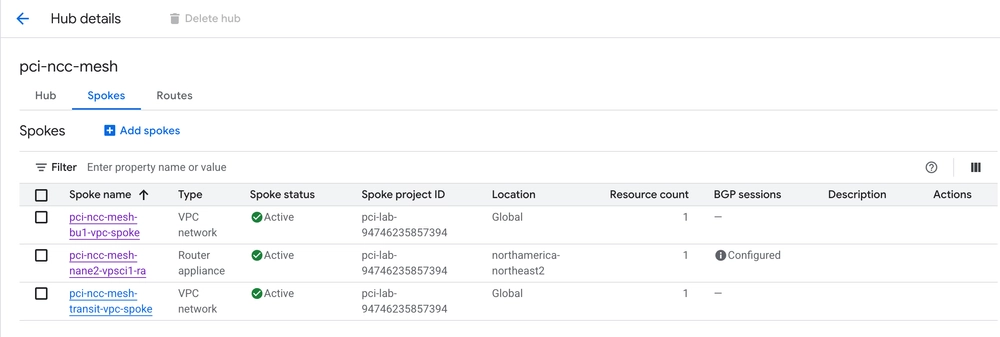

I went with the second option for several reasons. The goal was to avoid manual intervention in the event of an NVA failure and to prevent the operational overhead of maintaining static routes. Since NCC doesn’t propagate local static routes from a VPC spoke to others, converting this to a dynamic route was the only way to ensure NCC could distribute it automatically across all spokes.

Image: NCC Hub spokes for PCI zone; consisting of two or more VPC spokes (one for transit, others for business units), and a Router Appliance (for BGP with NVAs)

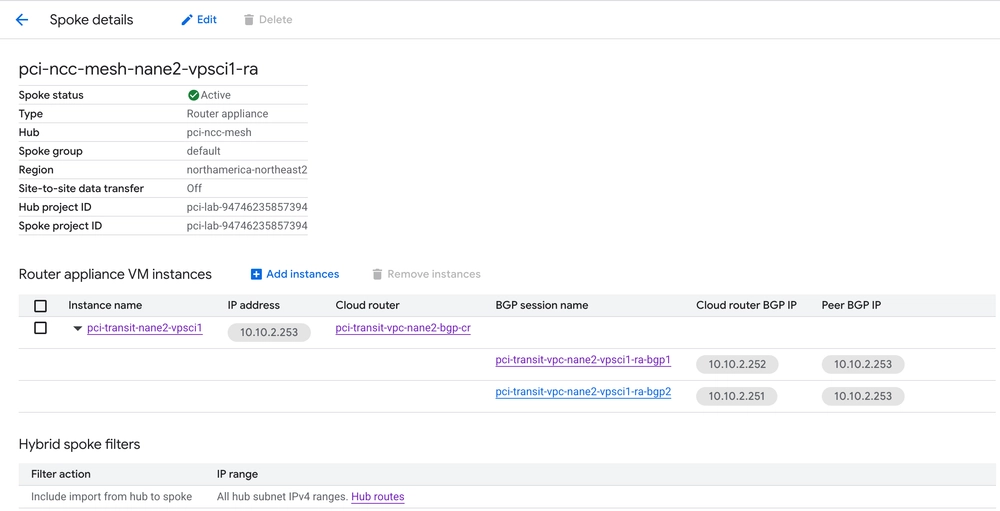

Image: Detailed view for Router Appliance with two BGP sessions

The router appliance has two roles:

- Advertise the non-PCI zone IP ranges to the NCC Hub to facilitate routing.

- Within the VM, for non-PCI IP ranges, have appropriate iptables rules to accept the traffic on nic0 and forward it through nic1 while masquerading to nic1’s interface address in postrouting.

For point 1, I have configured the BIRD daemon with a static route for the non-PCI zone pointing to the next hop of the PCI zone interface of the VM, which BIRD then advertises over its BGP sessions. I have also exported this to the kernel routing table so that the VM itself knows how to get to the entirety of the non-PCI zone (via the non-PCI NCC Hub).
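As a rough illustration of point 1, the sketch below renders a BIRD 2-style configuration with a static protocol for the non-PCI ranges, a kernel protocol that installs them locally, and one BGP protocol per Cloud Router peer. This is not the configuration from the lab: the addresses, ASNs, prefix, and next hop are made-up placeholders, and details such as multihop settings or how the guest OS resolves the next hop are left out and would need checking against your image and the BIRD documentation.

```python
def render_bird_conf(local_ip, local_asn, peer_asn, cloud_router_ips,
                     npci_prefixes, next_hop):
    """Render a BIRD 2-style config as text. All inputs are placeholders."""
    lines = [
        f"router id {local_ip};",
        "",
        "protocol device {}",
        "",
        "# Static routes covering the non-PCI ranges.",
        "protocol static npci {",
        "  ipv4;",
    ]
    lines += [f"  route {prefix} via {next_hop};" for prefix in npci_prefixes]
    lines += [
        "}",
        "",
        "# Install the static routes into the kernel routing table as well.",
        "protocol kernel {",
        "  ipv4 { export where source = RTS_STATIC; };",
        "}",
    ]
    # One BGP session per Cloud Router interface; only the static routes
    # are advertised, and nothing is imported in this simplified sketch.
    for index, peer_ip in enumerate(cloud_router_ips, start=1):
        lines += [
            "",
            f"protocol bgp cloud_router_{index} {{",
            f"  local {local_ip} as {local_asn};",
            f"  neighbor {peer_ip} as {peer_asn};",
            "  ipv4 {",
            "    import none;",
            "    export where source = RTS_STATIC;",
            "  };",
            "}",
        ]
    return "\n".join(lines) + "\n"


bird_conf = render_bird_conf(
    local_ip="10.10.0.2", local_asn=65001, peer_asn=64512,
    cloud_router_ips=["10.10.0.5", "10.10.0.6"],
    npci_prefixes=["10.20.0.0/16"], next_hop="10.10.0.1",
)
print(bird_conf)
```

Generating the file from a short list of prefixes keeps the advertised ranges in one place, which helps when the same template is reused across several appliances.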

For point 2, I have set up iptables within the VM to meet the packet routing and translation requirements.
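The forwarding and NAT behaviour described for point 2 could look roughly like the rule set generated below. It is a sketch under assumptions, not the rules from the lab: the interface names (ens4 for nic0, ens5 for nic1) and the non-PCI range are placeholders, and the script only prints the commands rather than applying them.

```python
import ipaddress
import shlex


def router_rules(pci_if, npci_if, npci_cidrs):
    """Return sysctl/iptables commands for one-way PCI to non-PCI routing."""
    rules = [["sysctl", "-w", "net.ipv4.ip_forward=1"]]
    for cidr in npci_cidrs:
        # Validates the CIDR and normalises its text form.
        net = str(ipaddress.ip_network(cidr))
        # Accept new flows entering on the PCI side and leaving toward non-PCI.
        rules.append(["iptables", "-A", "FORWARD", "-i", pci_if, "-o", npci_if,
                      "-d", net, "-j", "ACCEPT"])
        # Source-NAT those flows to the address of the non-PCI interface.
        rules.append(["iptables", "-t", "nat", "-A", "POSTROUTING",
                      "-o", npci_if, "-d", net, "-j", "MASQUERADE"])
    # Allow only reply traffic back from non-PCI to PCI.
    rules.append(["iptables", "-A", "FORWARD", "-i", npci_if, "-o", pci_if,
                  "-m", "conntrack", "--ctstate", "ESTABLISHED,RELATED",
                  "-j", "ACCEPT"])
    # Anything else that would be forwarded is dropped.
    rules.append(["iptables", "-P", "FORWARD", "DROP"])
    return rules


for rule in router_rules("ens4", "ens5", ["10.20.0.0/16"]):
    print(shlex.join(rule))
```

The default-drop policy on the FORWARD chain is what keeps the flow one-way here: new connections from the non-PCI side never match an ACCEPT rule.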

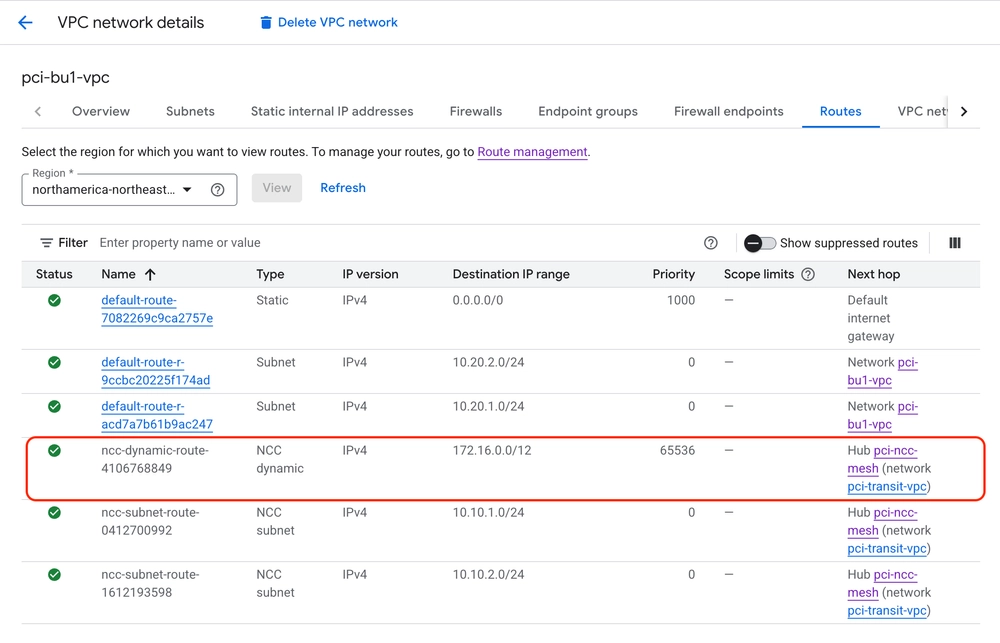

Below, you can see the dynamic route show up in a PCI business unit VPC, because the NCC Hub distributes both the subnet routes and the dynamic routes it learns.

Image: Dynamic route advertised by the Router Appliance spoke is seen in a business unit VPC spoke

With this setup, a VM in a PCI zone business unit VPC (pci-bu1-vpc) is able to talk to a VM in a non-PCI zone business unit VPC (npci-bu1-vpc) without any issues. The simple Debian VM I used can be replaced with a firewall appliance to apply traffic policies and/or packet inspection. Multiple NVAs can be deployed in each region as Router Appliances. In the event of an NVA failure, its BGP session with the NCC Hub goes down and its route disappears from the NCC route table. Once the withdrawal propagates, the effective routes converge and traffic shifts to the remaining appliances, so a single NVA failure does not cause an unrecoverable outage. With global dynamic routing enabled in the PCI VPC, traffic could even fail over to a different region if all Router Appliances in the local region were down.
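The failover behaviour can be pictured with a toy model. This is not how NCC or Cloud Router is implemented; it simply assumes that each appliance advertises the same prefix while its BGP session is up, that a lower priority value wins, and that routes learned from another region carry an extra cost (the value used here is arbitrary). Real deployments may also spread traffic across equal-cost routes, which this sketch ignores by breaking ties on the appliance name.

```python
from dataclasses import dataclass

INTER_REGION_COST = 200  # arbitrary penalty for this toy model


@dataclass(frozen=True)
class Advert:
    appliance: str
    region: str
    prefix: str
    priority: int = 100  # lower is preferred in this model


class HubRouteTable:
    """Toy route table: one advertisement per live BGP session."""

    def __init__(self):
        self._adverts = {}

    def session_up(self, advert):
        self._adverts[advert.appliance] = advert

    def session_down(self, appliance):
        # A dropped session withdraws that appliance's route.
        self._adverts.pop(appliance, None)

    def best_next_hop(self, prefix, source_region):
        candidates = [a for a in self._adverts.values() if a.prefix == prefix]
        if not candidates:
            return None

        def cost(advert):
            penalty = 0 if advert.region == source_region else INTER_REGION_COST
            return (advert.priority + penalty, advert.appliance)

        return min(candidates, key=cost).appliance


table = HubRouteTable()
table.session_up(Advert("nva-east-a", "us-east1", "10.20.0.0/16"))
table.session_up(Advert("nva-east-b", "us-east1", "10.20.0.0/16"))
table.session_up(Advert("nva-west-c", "us-west1", "10.20.0.0/16"))

print(table.best_next_hop("10.20.0.0/16", "us-east1"))  # nva-east-a
table.session_down("nva-east-a")
print(table.best_next_hop("10.20.0.0/16", "us-east1"))  # nva-east-b
table.session_down("nva-east-b")
print(table.best_next_hop("10.20.0.0/16", "us-east1"))  # nva-west-c
```

The last lookup only finds a next hop because the remote-region route is still visible, which is the role global dynamic routing plays in the design above.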

The beauty of virtual PSC interfaces is that you can reach several types of resources in the non-PCI zone through this interface. Examples include:

- VMs

- ILBs

- Other PSC Endpoints

- On-prem through VPNs, Interconnects with/without Cloud NAT for private NAT

- Public Internet using Cloud NAT

- Google APIs (with/without VPC-SC perimeters)

- Resources in other VPCs (either via VPC peering or NCC)

This may not be the silver bullet that solves every segmentation problem, but it is a novel approach that met the needs of my specific discussion with my colleague, so I felt like sharing it here. You can tack on additional layers of protection in GCP, like Cloud NGFW policy rules (for example: allow traffic in from the PCI zone, but not from the non-PCI zone), in addition to firewall rules within the NVA.

Acknowledgements: I sincerely thank Jonny Almaleh for carefully reviewing a draft version of this post for accuracy and providing some great feedback.

Note: This setup is not an extensive reference architecture that meets/exceeds PCI requirements. However, this can fit into a solution that solves the larger complexities of PCI/Non-PCI communications in GCP.